As web scraping becomes easier to use, more and more people are able to leverage the world’s web resources. As this trend grows, structured data from the web empowers businesses and enables a wave of new business ideas to become a reality. Now there is a new technology on the market called “self-contained agents” that might just turn this wave into a tsunami!

Self-contained agents are web scraping agents that are compiled and packaged with the web scraping software runtime so they are standalone.

When Content Grabber launched early this year, it included the ability to create scraping agents and export them as self-contained agents. This lets developers build self-contained web scraping agents that run independently of the licensed software and can be distributed royalty-free. With a Content Grabber Premium Edition license, developers can also white label these agents as their own.

All of a sudden, that agent you developed to solve a problem at work can be packaged as a self-contained agent and used to create new revenue streams for your business.

How to create self-contained agents

Both the Professional and Premium Edition licenses of Content Grabber allow users to create self-contained agents. From the main menu, simply choose File -> Export Agent and select the option Create Self-Contained Agent in the Export Agent window.

Before clicking Export to create the self-contained agent, you can click Customize Design to customize the agent UI components and text being displayed.

Customize agent GUI

A self-contained agent includes a user interface that allows users to configure and run the agent. You can control some of the text and images displayed on this user interface, as well as which agent options the end user can configure.

The Customize Self-Contained Agent screen has three tabs, with which one can specify the text and images that will be used on the user interface (UI) of the self-contained agent.

The Templates tab also allows you to specify custom template files. The standard configuration screens of a self-contained agent include Content Grabber promotion, so supplying your own HTML display templates lets you apply your own designs to these screens and white label your self-contained agent.

Input data

A self-contained agent can use any input data that is supplied by a database or CSV file. An agent can also have multiple public data providers, and each data provider can be selected from a drop-down box as shown below.

Output data to file formats only

A self-contained agent can export only to file formats: Excel, CSV or XML. It cannot export to databases or execute a data export script.

If you need to export data to a database or use an export script, you can build your own custom application using the Content Grabber runtime, or run your agents using the Content Grabber command-line tool. Both the runtime and the command-line tool can be distributed royalty-free if you own a Content Grabber Premium Edition license.
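Where a full custom application is more than you need, another route (not described above, and independent of Content Grabber) is to post-process the exported file yourself. The following is a minimal Python sketch that loads a CSV export into a SQLite table. The table name, column names and sample rows are made up for illustration, and it assumes the first CSV row holds simple column names.

```python
import csv
import io
import sqlite3


def load_csv_into_sqlite(csv_file, conn, table="scraped_items"):
    """Copy rows from an open CSV file object into a SQLite table."""
    reader = csv.reader(csv_file)
    header = next(reader)  # first row is assumed to hold the column names
    columns = ", ".join(f'"{name}"' for name in header)
    placeholders = ", ".join("?" for _ in header)
    conn.execute(f'CREATE TABLE IF NOT EXISTS "{table}" ({columns})')
    # Skip malformed rows whose length does not match the header.
    rows = [row for row in reader if len(row) == len(header)]
    conn.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', rows)
    conn.commit()
    return len(rows)


# Stand-in for a CSV file exported by an agent (hypothetical columns).
sample_export = io.StringIO("title,price\nWidget,9.99\nGadget,19.50\n")
conn = sqlite3.connect(":memory:")
inserted = load_csv_into_sqlite(sample_export, conn)
print(inserted, conn.execute("SELECT title, price FROM scraped_items").fetchall())
```

In practice you would pass a file opened with `open(path, newline="")` and a connection to your own database instead of the in-memory stand-ins.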

Upgrading a Self-Contained Agent

If the target website of a self-contained agent changes and the agent needs to be modified to keep working correctly, you can upgrade it. Simply make the changes to the existing agent within Content Grabber and export the agent again. The end user can overwrite their existing self-contained agent with the new one. Any configuration changes the end user has made to the agent will not be lost.

Drawback

The drawback of self-contained agents is that each agent has a minimum size of approximately 4 MB, no matter the complexity of the agent, because that’s the minimum size of the Content Grabber runtime environment included in it.

Self-contained agents can run without Content Grabber installed, but they do depend on Internet Explorer and .NET 4+, which are pre-installed on most newer versions of Windows.

Summary

Content Grabber’s self-contained agents are a very exciting new tool for developers and business entrepreneurs. They can be easily packaged as standalone tools and branded as your own. They’re relatively lightweight and easy to share online or by email: the packaged Content Grabber runtime is usually less than 5 MB in size. And because they are executables, no installation process is required.

Self-contained agents have now created a new reason for developers to use web scraping software over cloud-based solutions. It will be interesting to see how this takes off.

Even though quite a lot of specialists advise using user agents for scraping, it’s not a very common practice. Yet such a simple addition as a user agent (abbreviated to UA) can make a huge difference by automating and streamlining data gathering. So if you have never used such a tool, here is your sign that you should try it.

What are user agents?

A user agent is an identifier that the destination server uses to understand which browser, operating system, and device the given visitor is using.

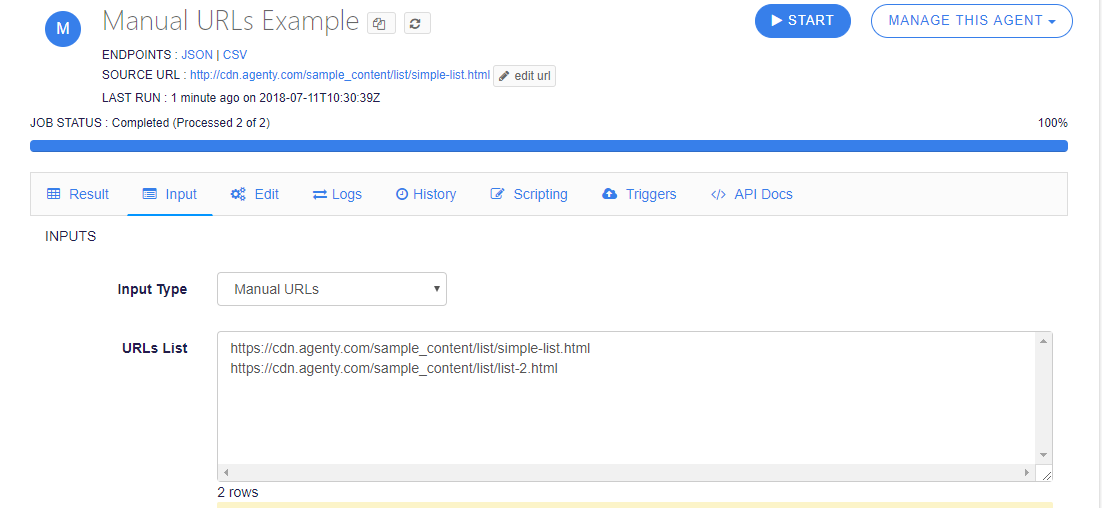

Mozilla's developer portal provides a helpful overview of the kind of information user agents typically contain.

Here's what an iPhone user agent looks like:
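As an illustration, the Python sketch below takes a sample string in the format Safari on an iPhone typically sends and splits it into its product tokens and parenthesised comments. The sample string and the helper name `split_user_agent` are assumptions chosen for this example; real strings vary with device and OS version.

```python
import re

# Sample string in the usual Safari-on-iPhone format (illustrative only).
IPHONE_UA = (
    "Mozilla/5.0 (iPhone; CPU iPhone OS 13_5_1 like Mac OS X) "
    "AppleWebKit/605.1.15 (KHTML, like Gecko) "
    "Version/13.1.1 Mobile/15E148 Safari/604.1"
)


def split_user_agent(ua):
    """Return (product tokens, parenthesised comments) found in a UA string."""
    comments = re.findall(r"\(([^)]*)\)", ua)
    without_comments = re.sub(r"\([^)]*\)", " ", ua)
    products = without_comments.split()
    return products, comments


products, comments = split_user_agent(IPHONE_UA)
print(products)  # name/version tokens such as the browser engine
print(comments)  # device and operating system details
```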

If you look at a UA, you will see just a text string that contains all the necessary information. The client sends this data through headers of a request every time a connection with the destination server is established. Then, the server will prepare a response that is suitable for a specific combination of a browser, operating system, and device.

Here is an example of how it works: when you open Facebook on your laptop, you are presented with the desktop version of the website. Try the same in a browser on your smartphone and you’ll see the mobile version. The server knows which version to show thanks to the user agent it receives.

Since a user agent is just a string of text, it’s not difficult to change it and trick the destination server. That’s why it’s useful to add user agents to the web scraping process — to make servers believe they’re being visited by different users from different devices.
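To show how little is involved, here is a minimal sketch using Python's standard `urllib`. The user agent string is a sample in the usual Chrome-on-Windows format and the URL is a placeholder; both are assumptions for illustration. The request is only built, not sent.

```python
import urllib.request

# Sample desktop Chrome string (illustrative; versions change frequently).
DESKTOP_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)


def build_request(url, user_agent):
    """Create a request object that carries the given User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


request = build_request("https://example.com/", DESKTOP_UA)
# urllib stores header names capitalised as "User-agent".
print(request.get_header("User-agent"))
# To actually send it: urllib.request.urlopen(request)
```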

Why is it important to use user agents?

As we’ve mentioned, user agents are not used very often for web scraping. But it would be smarter to add this tool to your array of scraping instruments, especially considering how advanced anti-scraping technologies have become. A couple of years ago, we could neglect user agents and still have a rather smooth data gathering process using only a scraper and proxies. Today, the lack of a user agent library will most likely lead to constant bans.

By default, a web scraper typically sends requests either with no user agent at all or with a library default (Python’s requests library, for example, announces itself as python-requests), and both look very suspicious to servers. They can instantly tell they’re dealing with a bot if a request doesn’t carry the data a real browser would provide. So it’s much better to add this extra step and start using a library of user agents if you want to gather data efficiently.
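What a scraper sends when you don't set anything depends on its HTTP library. As one example, this short sketch inspects the default header that Python's standard `urllib` opener attaches: a value naming the library itself, which is just as easy for a server to spot as a missing header.

```python
import urllib.request

# A fresh opener carries urllib's built-in default headers.
opener = urllib.request.build_opener()
default_headers = dict(opener.addheaders)

# Prints a value that starts with "Python-urllib/" followed by the version.
print(default_headers["User-agent"])
```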

How to achieve the best results with user agents?

Using this tool won’t give you the smooth process you desire if you just apply user agents without analyzing their strong and weak points. Here are some tips that will help you get the most out of them.

Opt for popular user agents

You can find different libraries of user agents, and it’s better to choose popular ones. Servers become very suspicious of UAs that don’t belong to major browsers and will most likely block such requests. Also, stick to user agents that match the browser you’re using for scraping, so that the UA is consistent with that browser’s default behavior.

Rotate both proxies and user agents

It’s important to rotate proxies during web scraping to change IP addresses and make a destination server believe that requests are sent from different users. The same rule works for user agents. If you just stick to the same UA for several requests, you will inevitably get blocked.

Rotate user agents with each request, just as you do with proxies, to produce convincing requests that won’t make a destination server suspect it’s dealing with a bot. Usually, UA rotation is performed with Python and Selenium, and you will find numerous detailed guides online that will help you master this tool.
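The idea can be sketched with Python's standard library alone (a Selenium setup would follow the same pattern, but is not shown here). The user agent strings are samples of common browser formats and the proxy addresses are hypothetical placeholders; the helper name `prepare_request` is also made up for this example. Requests are prepared but not sent.

```python
import itertools
import urllib.request

# Sample strings in the formats used by major browsers (illustrative only).
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:121.0) "
    "Gecko/20100101 Firefox/121.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.1 Safari/605.1.15",
]
# Hypothetical proxy endpoints; replace with the ones from your provider.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
]

ua_cycle = itertools.cycle(USER_AGENTS)
proxy_cycle = itertools.cycle(PROXIES)


def prepare_request(url):
    """Pair the URL with the next proxy and the next user agent in turn."""
    proxy = next(proxy_cycle)
    request = urllib.request.Request(url, headers={"User-Agent": next(ua_cycle)})
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    )
    return opener, request, proxy


for page in range(1, 4):
    opener, request, proxy = prepare_request(f"https://example.com/page/{page}")
    print(proxy, request.get_header("User-agent"))
    # opener.open(request) would send the request through the chosen proxy.
```

Cycling through both lists guarantees that consecutive requests never reuse the same proxy or user agent; picking at random is a common alternative but can repeat a value back to back.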

The bottom line

No matter how advanced a scraper is and how well it can deal with CAPTCHAs, you still need to improve it with proxies and user agent libraries. Both tools require rotation that will assign a new proxy and UA to each request. Once you have the rotation automated, you will achieve the smoothest data gathering process.